Using AI Automations On Type, On Save, and in CI

AI models are getting faster, smaller, and more capable. Isn’t it about time we started using them more often, with our own hooks?

A common pattern in software development is running my linter or code formatter “on save”.

That means that every time I save a file, the code automatically formats itself exactly according to my strict code standards. It does things like:

Converting all single quotes to double quotes

Spacing all the code perfectly

Rewrapping any line longer than 120 characters

Importing the types I’m using

And so on.

But we’re entering a new era where computers don’t have to follow only strict styling rules anymore. Computers, via LLMs, can help me accomplish a lot more, especially when they have context on my project, my intent, and the part of my workflow I’m in.

DeepSeek and Gemini Flash show that AI queries are becoming so cheap that it will be hard to spend a substantial amount of money on them. Gemini 2.0 Flash is now 10 cents per MILLION input tokens. A token is roughly three-quarters of an English word, so that is about 10 cents per 750,000 words. Output is 40 cents per million tokens. For small, frequent jobs like editor hooks, LLMs are basically free.
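To put “basically free” in concrete terms, here is a rough TypeScript sketch that converts those per-million-token prices into a cost per save. The ten hooks and the 2,000-in / 500-out token counts are made-up assumptions, and prices change, so treat the constants as placeholders.

```typescript
// Rough per-save cost estimate for running several LLM hooks.
// Prices are USD per million tokens, as quoted above for Gemini 2.0 Flash;
// check current pricing before relying on them.
const INPUT_PRICE_PER_MILLION = 0.1;
const OUTPUT_PRICE_PER_MILLION = 0.4;

interface HookUsage {
  inputTokens: number; // prompt + file/codebase context sent to the model
  outputTokens: number; // tokens the model writes back
}

function estimateCostUSD(usages: HookUsage[]): number {
  let total = 0;
  for (const u of usages) {
    total += (u.inputTokens / 1_000_000) * INPUT_PRICE_PER_MILLION;
    total += (u.outputTokens / 1_000_000) * OUTPUT_PRICE_PER_MILLION;
  }
  return total;
}

// Hypothetical example: ten hooks, each reading a 2,000-token file
// and writing a 500-token response.
const tenHooks: HookUsage[] = [];
for (let i = 0; i < 10; i++) {
  tenHooks.push({ inputTokens: 2_000, outputTokens: 500 });
}

const perSave = estimateCostUSD(tenHooks);
console.log(perSave.toFixed(4)); // "0.0040"
console.log(Math.round(1 / perSave)); // about 250 saves per dollar
```

At these assumed sizes, a dollar covers roughly 250 saves with all ten hooks running; smaller files or fewer hooks stretch it further.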

So here is my big claim for the future:

Text-editing workflows should include custom LLM “hooks” for every step of my process.

What do I mean by this? Well, to understand, let’s just go through a few of these “hook” moments, one by one.

On Save

Every time I press save on a file, an LLM should check my work. No, an army of LLMs should check my work, each with its own prompt. One of them should have the context of the entire codebase; another should have only the context of the file. One should consider whether I’m being creative and clever enough; another should ask whether I’ve written the cleanest file possible. Every word, every variable name, and every phrase should be inspected for meaning. One LLM, called the jester, should just play little pranks in a pleasant, non-interruptive way, so I enjoy coding that much more.
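A minimal sketch of that on-save army, assuming each reviewer is just a name, a prompt, and a context scope (the saved file alone, or the whole codebase). ModelCall is a hypothetical stand-in for whatever LLM client you actually use; nothing here is a real editor API.

```typescript
// On-save reviewer hooks: each hook has its own prompt and its own context scope.
// `ModelCall` is a hypothetical stand-in for whatever LLM client you use.

type Scope = "file" | "codebase";

interface SaveHook {
  name: string;
  scope: Scope;
  prompt: string;
}

interface SaveEvent {
  path: string;
  contents: string;
  codebase: Map<string, string>; // path -> contents for every project file
}

type ModelCall = (prompt: string, context: string) => Promise<string>;

// Build the context a hook is allowed to see: the saved file only, or every file.
function contextFor(hook: SaveHook, event: SaveEvent): string {
  if (hook.scope === "file") {
    return `// ${event.path}\n${event.contents}`;
  }
  const parts: string[] = [];
  event.codebase.forEach((text, p) => parts.push(`// ${p}\n${text}`));
  return parts.join("\n\n");
}

// Run every hook in parallel. A failing hook reports an error string
// instead of rejecting, so one bad call doesn't hide the other reviews.
async function runSaveHooks(
  hooks: SaveHook[],
  event: SaveEvent,
  callModel: ModelCall,
): Promise<Map<string, string>> {
  const outputs = await Promise.all(
    hooks.map((h) =>
      callModel(h.prompt, contextFor(h, event)).catch((err: unknown) => `error: ${String(err)}`),
    ),
  );
  const byName = new Map<string, string>();
  hooks.forEach((h, i) => byName.set(h.name, outputs[i]));
  return byName;
}

// Example reviewers (prompts are illustrative, not tuned).
const reviewers: SaveHook[] = [
  { name: "cleanliness", scope: "file", prompt: "Is this the cleanest version of this file? Suggest concrete changes." },
  { name: "naming", scope: "file", prompt: "Review every variable and function name for clarity and meaning." },
  { name: "architecture", scope: "codebase", prompt: "Does this change fit the rest of the codebase? Flag anything it might break." },
  { name: "jester", scope: "file", prompt: "Leave one short, friendly joke about this code. Never interrupt." },
];
```

Using Promise.all with a per-call catch keeps the reviewers independent: the jester timing out shouldn’t swallow the naming review.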

When I save a file, that’s a good chance to check whether the changes affect the rest of the codebase. One LLM could be dedicated entirely to this, predicting every place in the codebase I’m going to change next.
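One cheap way to give that impact-predicting LLM a head start, sketched here as an assumption rather than a recommendation: first list the files that directly import the module you just saved, then hand that shortlist to the model. The regex below only understands relative `from "./x"` imports.

```typescript
import * as path from "node:path";

// Hypothetical pre-filter for an "impact" hook: before asking an LLM where the
// next edits will land, list the files that directly import the saved module.
// Only relative `from "./x"` imports are handled; a real tool would use the
// TypeScript compiler API or a language server instead of a regex.
const IMPORT_RE = /from\s+["'](\.{1,2}\/[^"']+)["']/;

function stripExt(p: string): string {
  return p.replace(/\.(ts|tsx|js|jsx)$/, "");
}

function directDependents(savedPath: string, codebase: Map<string, string>): string[] {
  const target = stripExt(savedPath);
  const dependents: string[] = [];
  codebase.forEach((source, file) => {
    if (file === savedPath) return;
    const re = new RegExp(IMPORT_RE.source, "g"); // fresh lastIndex per file
    let m: RegExpExecArray | null;
    while ((m = re.exec(source)) !== null) {
      // Resolve "./utils" relative to the importing file's directory.
      const resolved = path.posix.join(path.posix.dirname(file), m[1]);
      if (stripExt(resolved) === target) {
        dependents.push(file);
        break;
      }
    }
  });
  return dependents.sort();
}
```

The shortlist keeps the prompt small; the LLM can still be given the whole codebase if the shortlist looks suspiciously empty.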

Another should find any special comments prefixed with //ai: and replace them with whatever it suggests, like //ai: suggest a solution for this.
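Here is a sketch of how the //ai: hook might work, assuming a directive is a whole-line comment and the model’s answer replaces that line. `suggest` is a hypothetical LLM call that receives the instruction plus the full file for context.

```typescript
// Sketch of the "//ai:" hook: find whole-line comments of the form
// `//ai: <instruction>`, ask a model for a replacement, and splice the answer
// in place of the comment. `Suggest` is a hypothetical stand-in for an LLM call.

type Suggest = (instruction: string, fileContents: string) => Promise<string>;

interface AiDirective {
  line: number; // 0-based line index
  indent: string; // leading whitespace, reused for the replacement
  instruction: string; // text after "//ai:"
}

const AI_COMMENT = /^(\s*)\/\/\s*ai:\s*(.+)$/;

function findDirectives(source: string): AiDirective[] {
  const found: AiDirective[] = [];
  source.split("\n").forEach((text, line) => {
    const m = AI_COMMENT.exec(text);
    if (m) found.push({ line, indent: m[1], instruction: m[2].trim() });
  });
  return found;
}

async function resolveDirectives(source: string, suggest: Suggest): Promise<string> {
  const lines = source.split("\n");
  const directives = findDirectives(source);
  // Ask about every directive in parallel, always against the original file.
  const answers = await Promise.all(directives.map((d) => suggest(d.instruction, source)));
  // Replace from the bottom up so earlier line indices stay valid.
  for (let i = directives.length - 1; i >= 0; i--) {
    const d = directives[i];
    const replacement = answers[i].split("\n").map((l) => d.indent + l);
    lines.splice(d.line, 1, ...replacement);
  }
  return lines.join("\n");
}
```

Trailing comments such as `const y = 2; // note` are deliberately ignored; only a line that is nothing but an //ai: comment counts as a request.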

A few of them should have internet access and simply look things up on my behalf, to double-check that I’m doing everything the right way.

One of them could automatically write or update unit tests for the modules I changed, while another could automatically write integration tests.

I could have an army of assistants, each hyper-focused on some aspect of my work, constantly checking whether they can help with my goals every time I save a file. And I should be the one to configure them!

On Type

Cursor already does this, and I can already give it a custom prompt. But why only one custom prompt, and why only one Cursor agent? Why not several?

The point of On Type agents is to anticipate what you are going to say before you say it, and to suggest completions you hadn’t thought about.

These are harder to get right, and you don’t want to go overboard, but I imagine clever programmers could invent incredibly helpful on-type assistants.
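Part of what makes on-type agents hard is timing: firing several models on every keystroke is wasteful and noisy. A minimal sketch, assuming a 300 ms debounce and a hypothetical agent shape (both chosen for illustration, not taken from Cursor), runs all agents once the user pauses and drops results that arrive after more typing.

```typescript
// Several on-type agents behind one debounce: a burst of keystrokes triggers a
// single round of suggestions after the user pauses. The agent shape and the
// default delay are assumptions for illustration.

interface OnTypeAgent {
  name: string;
  suggest: (textBeforeCursor: string) => Promise<string | null>;
}

interface Suggestion {
  agent: string;
  text: string;
}

function createOnTypeRunner(
  agents: OnTypeAgent[],
  onSuggestions: (s: Suggestion[]) => void,
  delayMs = 300,
) {
  let timer: ReturnType<typeof setTimeout> | undefined;
  let generation = 0; // bumps on every keystroke; stale results are dropped

  return function onType(textBeforeCursor: string): void {
    generation++;
    const mine = generation;
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(async () => {
      const results = await Promise.all(
        agents.map(async (a) => ({
          agent: a.name,
          text: await a.suggest(textBeforeCursor).catch(() => null), // a failing agent stays silent
        })),
      );
      if (mine !== generation) return; // user kept typing while agents ran
      onSuggestions(results.filter((r): r is Suggestion => r.text !== null));
    }, delayMs);
  };
}
```

Agents that have nothing useful to say return null and simply don’t appear, which is one way to keep several assistants from going overboard.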

On Commit

Honestly, my commit messages are so lazy these days. I should just use an AI to automatically categorize my commits (with a gitmoji, perhaps?) and write their descriptions. It can put its basic description first, followed by my random human utterances like “this will never work” or “i did it” or “ugh” or whatever else I choose to put after git commit -m.
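That message format can be sketched as a small formatter. The five categories are a subset of gitmoji, and the AI summary is assumed to come from an LLM that read the staged diff (not shown here).

```typescript
// On-commit message format: gitmoji + AI-written summary as the subject line,
// then whatever the human typed after `git commit -m` as the body.
// The category list is a small subset of gitmoji; the summary is assumed to
// come from an LLM that read the staged diff.

const GITMOJI = {
  feature: "✨",
  fix: "🐛",
  docs: "📝",
  refactor: "♻️",
  tests: "✅",
} as const;

type Category = keyof typeof GITMOJI;

function composeCommitMessage(category: Category, aiSummary: string, humanNote: string): string {
  const subject = `${GITMOJI[category]} ${aiSummary.trim()}`;
  const note = humanNote.trim();
  // Git convention: subject line, blank line, then the body.
  return note ? `${subject}\n\n${note}` : subject;
}

console.log(composeCommitMessage("fix", "Handle empty config files in loader", "ugh"));
```

To wire it up, one option is Git’s prepare-commit-msg hook, which receives the path of the commit message file as its first argument: read the human note from that file, then write the composed message back.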

In CI

AI should be a CI step.

The most obvious assistant here is a pull request review assistant. A human should never be the first reviewer on a pull request anymore, especially now that Gemini 2.0 Flash accepts around a million tokens of context, enough to hold many small and medium-sized codebases in full. An AI should obviously be the first to review every pull request, but there are probably other useful things an AI could do in CI: writing to a devlog, drafting patch notes or release notes, updating the README when the latest changes have made it stale, or automatically writing unit tests.

Maybe some of these things happen when a pull request is opened, but maybe other ones happen after any commit to main is made (like after a pull request is accepted and merged).
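The split between “when a pull request is opened” and “after a commit to main” amounts to a lookup from CI event to bots. A sketch, with event names loosely modeled on GitHub Actions’ pull_request and push triggers and bot names that are purely hypothetical:

```typescript
// Which AI bots run for which CI event. Event shapes loosely follow GitHub
// Actions' `pull_request` and `push` triggers; bot names are hypothetical.

type CiEvent =
  | { kind: "pull_request"; action: "opened" | "synchronize" }
  | { kind: "push"; branch: string };

type Bot = "pr-review" | "unit-tests" | "readme" | "devlog" | "release-notes";

function botsFor(event: CiEvent): Bot[] {
  if (event.kind === "pull_request") {
    // Every new or updated PR gets an AI review before a human looks at it.
    return ["pr-review", "unit-tests"];
  }
  if (event.branch === "main") {
    // After a merge: keep docs and project history in sync with what shipped.
    return ["readme", "devlog", "release-notes"];
  }
  // Pushes to other branches don't trigger any bots in this sketch.
  return [];
}

console.log(botsFor({ kind: "push", branch: "main" }));
```

Keeping the mapping in one function (or one config section) makes it easy to move a bot from “on PR” to “after merge” without touching the bots themselves.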

Every project could have a README bot. Every project could have a DEVLOG bot. Every project could have better commit messages, faster pull request reviews, and automatically updated unit tests.

All of This Could Be Close to Free and Open Source

To me, this just seems like configuration, right? There’s plenty of complexity in text-editing APIs, to be sure, but I imagine an open-source tool that helps AI agents write changes to text files automatically and efficiently already exists, and if it doesn’t, it wouldn’t be too hard to build with Bun or Node.
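For the “write changes to text files” part, one simple approach (sketched here from scratch, not any specific tool’s format) is to have the agent return search/replace pairs and apply each one only when its search text matches exactly once, so stale or ambiguous edits are rejected instead of guessed at.

```typescript
// Apply agent-proposed search/replace edits to a file's text.
// Each edit must match exactly once; otherwise the whole batch is rejected.

interface SearchReplaceEdit {
  search: string;
  replace: string;
}

type ApplyResult = { ok: true; text: string } | { ok: false; error: string };

function countOccurrences(haystack: string, needle: string): number {
  if (needle === "") return 0;
  let count = 0;
  let from = 0;
  while (true) {
    const idx = haystack.indexOf(needle, from);
    if (idx === -1) return count;
    count++;
    from = idx + needle.length;
  }
}

function applyEdits(original: string, edits: SearchReplaceEdit[]): ApplyResult {
  let text = original;
  for (let i = 0; i < edits.length; i++) {
    const { search, replace } = edits[i];
    const n = countOccurrences(text, search);
    if (n !== 1) {
      // Refuse ambiguous or stale edits rather than guessing.
      return { ok: false, error: `edit ${i}: search text found ${n} times` };
    }
    // Splice by index instead of String.replace, so "$1" in the
    // replacement is inserted literally rather than treated as a pattern.
    const idx = text.indexOf(search);
    text = text.slice(0, idx) + replace + text.slice(idx + search.length);
  }
  return { ok: true, text };
}
```

Because edits apply in order against the updated text, a later edit can target code an earlier edit just wrote; rejecting the whole batch on the first mismatch keeps the file from ending up half-edited.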

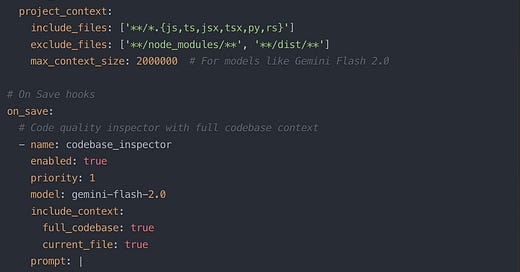

After that, it’s just YAML (god forbid) or JSON config, or whatever your config file of choice is.

So what would that config file need? Custom prompts for each hook, and for each hook a data attribute that lets me use the specific data from that step inside my prompts.
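Whatever the file format ends up being, the shape of such a config can be sketched in TypeScript: each hook names the moment it runs on, a prompt, and the data attributes that moment exposes, referenced in the prompt as {{name}} placeholders. Every event name, attribute name, and hook below is hypothetical.

```typescript
// Hook config sketch. Each hook picks a moment (`on`), and its prompt can
// reference that moment's data as {{key}} placeholders.
// All event names, attribute names, and hooks here are hypothetical.

type HookEvent = "onSave" | "onType" | "onCommit" | "onPullRequest" | "onMerge";

// Data attributes each moment makes available to prompts.
interface EventData {
  onSave: { filePath: string; fileContents: string; diff: string };
  onType: { filePath: string; textBeforeCursor: string };
  onCommit: { stagedDiff: string; userMessage: string };
  onPullRequest: { title: string; diff: string };
  onMerge: { commitLog: string; readme: string };
}

interface HookConfig<E extends HookEvent> {
  name: string;
  on: E;
  prompt: string; // may reference {{key}} for any key of EventData[E]
}

// Fill {{key}} placeholders from the event's data; unknown keys stay visible.
function renderPrompt<E extends HookEvent>(hook: HookConfig<E>, data: EventData[E]): string {
  const values = data as unknown as Record<string, string>;
  return hook.prompt.replace(/\{\{(\w+)\}\}/g, (whole: string, key: string): string =>
    Object.prototype.hasOwnProperty.call(values, key) ? values[key] : whole,
  );
}

const commitWriter: HookConfig<"onCommit"> = {
  name: "commit-writer",
  on: "onCommit",
  prompt: "Summarize this diff in one line with a gitmoji, then add the note.\nDiff:\n{{stagedDiff}}\nNote: {{userMessage}}",
};

const readmeBot: HookConfig<"onMerge"> = {
  name: "readme-bot",
  on: "onMerge",
  prompt: "Given these commits:\n{{commitLog}}\nUpdate this README only if it is out of date:\n{{readme}}",
};

console.log(renderPrompt(commitWriter, { stagedDiff: "+ const x = 1;", userMessage: "ugh" }));
```

A YAML file could hold the same three fields per hook; the TypeScript types just make explicit which data each moment can offer.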
